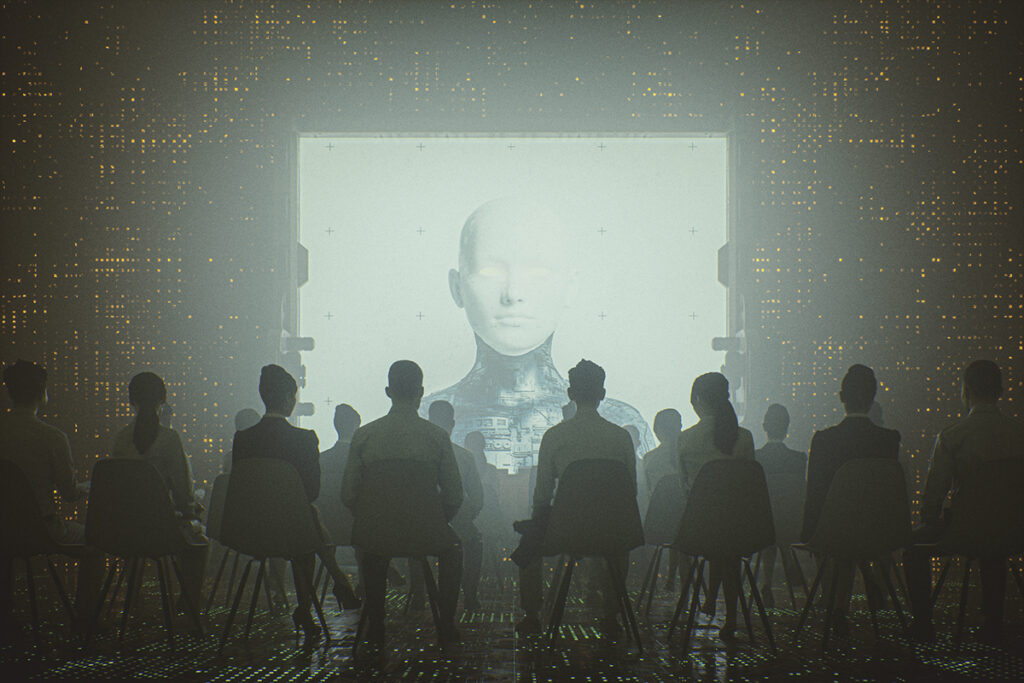

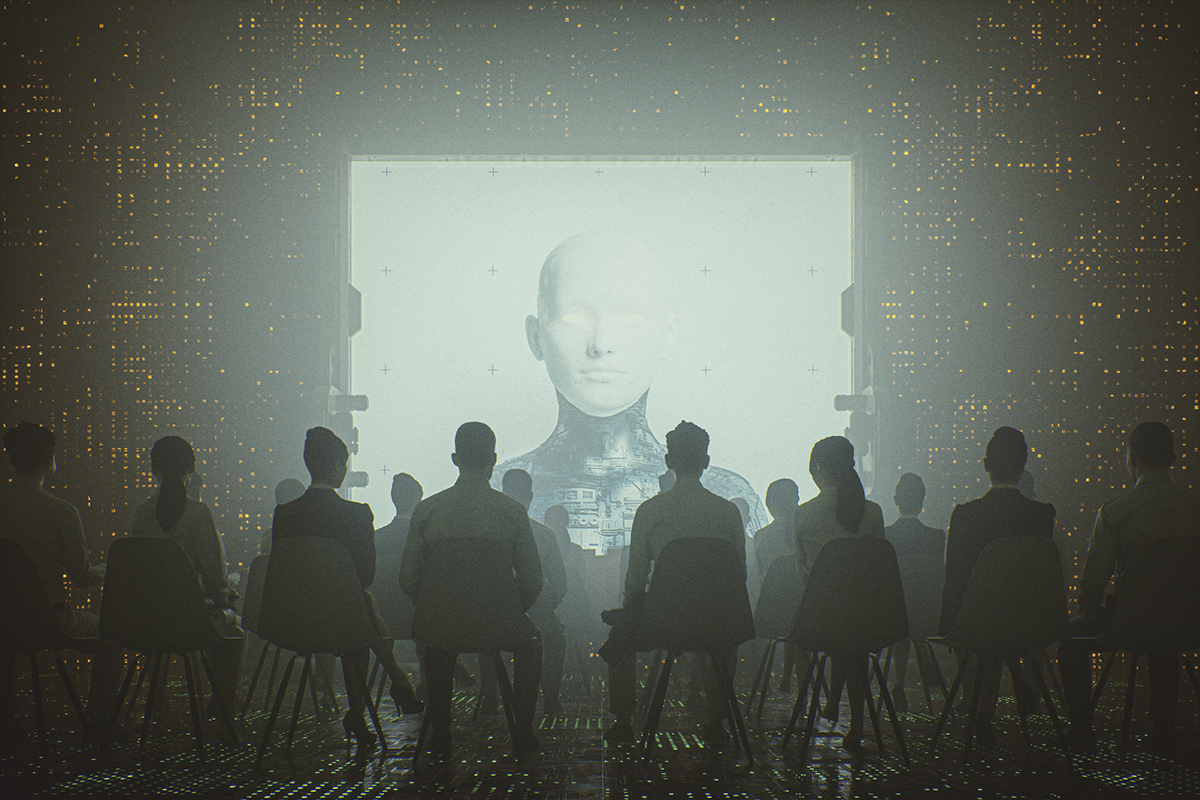

3 Myths of Artificial Intelligence: The Hidden Bias Behind AI Tools

By Amy Chivavibul

The age of Artificial Intelligence is upon us.

OpenAI’s ChatGPT now has the fastest-growing user base for a consumer app, reaching 100 million users within just two months of its launch.

In the healthcare industry, doctors are using AI tools to help complete heavy paperwork and prevent burnout. And AI is already being trained to detect cancer in CT scans and X-rays.

As Artificial Intelligence transforms how we work, its global revenue is forecasted to reach $134.5 trillion by 2030 – that’s over 35% more than the forecasted revenue for Big Data.

Despite AI’s rapid growth, data ethics researchers have demonstrated that these technologies can be a double-edged sword: a tool with immense potential for good, but also a tool that can contribute to systemic bias. As AI tools become an “everyday” part of our lives, we must view them with a critical lens and consider the following misconceptions:

Myth #1: Artificial Intelligence is neutral.

It can be easy to assume AI outputs are free from human bias. Yet AI algorithms rely on data from the real world, which means that existing biases can be replicated and amplified.

Lack of representation of Black, Indigenous, and People of Color (BIPOC), for example, continues to be an issue in the digital world.

The multicultural agency Lerma found that generative AI tools created stereotypical and distorted results when prompted to generate pictures of Hispanic and Latin American people. Even after regenerating prompts, the agency received troubling AI outputs: images of mustached males wearing sombreros, and a Latin American figure wearing a mariachi hat while eating a taco.

In 2020, Twitter’s former ethics team, META (Machine Learning Ethics, Transparency, and Accountability), found that the app’s image-cropping algorithm favored the faces of white individuals over the faces of Black individuals. The team was among the many eliminated after Elon Musk acquired Twitter in 2022.

Beyond image generation and cropping, algorithms have produced racially biased outcomes that threaten the lives of BIPOC. ProPublica found that COMPAS (Correctional Offender Management Profiling for Alternative Sanctions), a criminal risk assessment and decision support tool used by US courts, incorrectly labeled Black defendants as higher risk for future crime than white defendants who had committed similar crimes. The crime prediction software PredPol disproportionately targeted Black and Latin American neighborhoods for daily police patrols. Misuse of facial recognition has led to the wrongful arrests of at least five Black individuals. And one health system reduced the quality of care for Black patients by underrating their health risk scores.

Different AI tools, depending on the data upon which they’re trained, can even have varying notions of empathy, race, identity, and ethics. Simple tests, such as feeding different chatbots the same prompt, can expose the bias embedded in different large language models (LLMs). LLMs are algorithms that specialize in natural language processing (NLP), giving chatbots like ChatGPT the ability to recognize, translate, predict, and generate text.

Jeff Hunter, a leader of AI innovation at Ankura, works closely with LLMs. Hunter and his team test LLMs by using the Voight-Kampff Test from the groundbreaking science fiction film Blade Runner: “You’re sitting down watching television and a wasp lands on your arm. What do you do?”

“We’ve taken that simple question, and we use it on every new version,” says Hunter. “So from GPT 2.0 through 4.0, all the variations of Bard, and so on and so forth, and we asked them that exact same question. And the answers are so different from one end of the spectrum to the other… And that’s partially based on the bias of the data they use to train the model, their [the designers’] own bias, and how they set up the tone [and] temperament of how the model should operate.”

Hunter explains, “Some of the answers were simply, ‘I would just kill it.’ We were never expecting the large language models to say ‘kill.’ But on the other spectrum, one large language model responded saying, ‘What do you mean by a wasp?’ It thought we were talking about a White Anglo-Saxon Protestant (WASP).”

Hunter’s findings, in which one model answered with the word ‘kill’ and another mistook the insect for a White Anglo-Saxon Protestant, are a testament to how LLMs are not all created equal. Rather, each AI system expresses its own ideas of empathy, race, identity, and ethics.

Myth #2: Artificial Intelligence is only about the future.

The excitement around the future of Artificial Intelligence often shrouds the fact that AI tools are dependent on our present and past.

After all, tools like ChatGPT use both historical and real-time data to make predictions. With Myth #1 in mind, it’s important to critically examine precisely what historical data is used to train these powerful models.

GPT-3, the model family behind the original ChatGPT, was trained in large part on the Common Crawl dataset, which contains petabytes of freely available web data. According to Hunter, the Common Crawl dataset is “basically the largest collection of Internet data ever put together.” But it’s all relative: “Only about 4 to 6% of the world’s data is indexed and searchable or scraped by all these companies. So there’s a lot of other data out there that they didn’t use,” says Hunter.

This statistic recalls Myth #1 and the issue of representation. Whose voices and experiences make up the most accessible 4-6% of Internet data? Whose voices and experiences are left out, given the current and past “Digital Divide”? How could past prejudices be replicated? What narratives are being told?

These questions are important to ask, especially since tech tools like AI are implemented in the real, social world, rather than an ideal, futuristic one.

Matthew Bui, an assistant professor at the University of Michigan School of Information, specializes in urban data justice and the racial politics of data-driven tech, policy, and initiatives. Bui explains, “Sometimes people, especially technologists, emphasize the technical features of a specific type of technology: that is, how it works, how it improves on other tech. Yet, these technological devices and systems are deployed in our social world and they take on new meaning as different users and groups engage with them.”

In other words, the social context of technologies like AI is more important than ever. We must understand the past (and current) social world to implement AI in more just ways.

Consider the “two faces” of wearable technology. According to Chris Gilliard and David Golumbia, Apple Watches and Fitbits are voluntary, luxury items for those who can afford them; yet devices like ankle monitors often subject marginalized populations to increased surveillance.

Social context illuminates how tech can be inequitable, which is why we cannot narrowly think of AI as futuristic technology. AI tools are products of the human past and are implemented in the current social world.

Myth #3: Artificial Intelligence is completely automated.

After all, it’s in the name: artificial intelligence, machine learning, deep learning. These phrases imply that AI is automated and independent of human intervention and labor.

And it’s true – to an extent.

But the large, low-wage human labor force that supports AI is swept under the rug by the tech industry. AI systems require labeled training data, and those labels only exist because humans apply their eyes and expertise to create them. Data labelers are estimated to make an average of $1.77 per task, while the companies that hire them raise millions in venture capital funding.

Further suggesting that this labor system is exploitative, data-labeling companies often hire people from poor and underserved communities, such as refugees and incarcerated people.

Cade Metz, a technology correspondent for The New York Times, investigated iMerit, an India-based company that supplies training data for AI systems. In its facilities, thousands of workers label images, from pinpointing polyps in colonoscopy videos to identifying noxious images for content moderation. “What I saw didn’t look very much like the future – or at least the automated one you might imagine. The offices could have been call centers or payment processing centers,” writes Metz in his article.

Consider another way we often view AI as automated: when any new innovation emerges, it can be easy to think that we, as humans, cannot control the future of technology and are not responsible for its failures and unintended consequences. This belief is known as the myth of technological determinism.

In reality, tech executives and regulators have the power to decide whether AI tools get rolled out to the public. Microsoft and Google, for example, released their chatbots despite their own employees finding the products inaccurate and potentially dangerous.

These decisions are not automated or inevitable; rather, the “AI arms race” has made Big Tech leaders prioritize market dominance over caution.

Conclusion

We’re not suggesting that you shy away from Artificial Intelligence; instead, we encourage you to think critically about why and how to implement AI.

Is AI being used as a replacement for human decision-making? How are you challenging the objectivity of AI and its outputs? Do you have an understanding of AI’s training data?

Stay tuned for upcoming blog posts to learn more about how AI can be designed and implemented in more responsible and ethical ways.

As for now, consider these three myths before hopping on the AI bandwagon.