How to Use AI Responsibly: 5 Ways to Confront AI Bias

By Amy Chivavibul

AI is not neutral – but there are ways to keep its biases in check.

My previous blog post, 3 Myths of Artificial Intelligence: The Hidden Bias Behind AI Tools, dispelled the myths that AI is neutral, that it’s only about the future, and that it’s completely automated.

AI is already here: Amazon uses generative AI to summarize product reviews, and OpenAI has announced new capabilities that allow ChatGPT to “see, hear, and speak.” Gartner even predicts that conversational AI will be embedded within 40% of enterprise applications by 2024, a huge increase from less than 5% in 2020.

AI, while imperfect, is a powerful tool that can improve efficiency – from writing emails and creating Excel formulas to organizing a messy computer desktop.

AI bias must be confronted to create a more equitable world in the Information Age. Follow these strategies to use AI in more ethical and responsible ways:

1. Fact-check and request citations.

Generative AI can instantly create content like emails, poetry, images, and even code. Despite its near-human fluency, AI output is unpredictable.

AI can ‘hallucinate’ and create outright falsehoods: ChatGPT has fabricated citations and made definitive statements about uncertain historical events, and using biased text-to-image models to generate suspect sketches could lead to wrongful arrests.

When using chatbots and other generative AI tools, fact-checking is essential.

Jeff Hunter, a leader of AI innovation at Ankura, describes one way his team keeps misinformation and bias at bay when interacting with chatbots: “We wrote our own bot capability so that every time there’s a response it lists off the citations at the end, like a book would do, and then we have a validator engine that verifies all those links are real and can find representative data of the answers it gave back to us.”

What does this look like for the average user? Requesting citations in your prompts as often as possible.

But fact-checking doesn’t end with just a citation, as Hunter is quick to point out: “Always check your citations. If you can’t verify the source, you can’t verify the answer.”

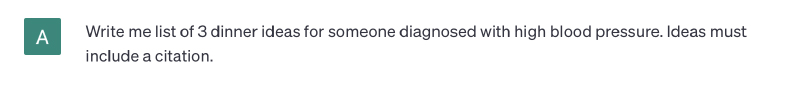

For example, when I prompted ChatGPT to “Write me a list of 3 dinner ideas for someone diagnosed with high blood pressure. Ideas must include a citation,” the chatbot returned a detailed list with accurate-sounding sources.

However, only one of the three links was legitimate. The link to the Mayo Clinic’s DASH Diet took me to a random image on the Mayo Clinic website, and the American Heart Association’s “Vegetarian Chickpea Curry” recipe returned a 404 Error.

The takeaway? ChatGPT and other generative AI tools are not always trustworthy.
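Hunter's team built its own validator, and its internals aren't described here. For readers who write a little code, the most basic part of the idea, checking whether each cited link even loads, can be sketched with Python's standard library. This is a minimal, hypothetical sketch: the names `extract_urls`, `check_url`, and `report` are illustrative and are not Ankura's tool. A link that loads proves very little on its own; as the Mayo Clinic example shows, a live URL can still point to the wrong page, so you still have to read the source.

```python
from __future__ import annotations

import http.client
import re
import urllib.error
import urllib.request

# Rough pattern for http(s) links: stop at whitespace, quotes, brackets, or ')'.
URL_PATTERN = re.compile(r"https?://[^\s<>\"')\]]+")


def extract_urls(text: str) -> list[str]:
    """Pull unique http(s) links out of a chatbot reply, dropping trailing punctuation."""
    urls: list[str] = []
    for match in URL_PATTERN.findall(text):
        url = match.rstrip(".,;:!?")
        if url not in urls:
            urls.append(url)
    return urls


def check_url(url: str, timeout: float = 10.0) -> int | None:
    """Return the HTTP status code for url, or None if it could not be reached at all."""
    try:
        # Hypothetical User-Agent string; some sites reject requests without one.
        request = urllib.request.Request(url, headers={"User-Agent": "citation-checker/0.1"})
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # e.g. 404 for a page that doesn't exist
    except (OSError, ValueError, http.client.HTTPException):
        return None  # malformed URL, DNS failure, timeout, dropped connection, ...


def report(text: str) -> dict[str, str]:
    """Label every link in a reply as resolving, broken, or unreachable."""
    results: dict[str, str] = {}
    for url in extract_urls(text):
        status = check_url(url)
        if status is None:
            results[url] = "unreachable"
        elif 200 <= status < 400:
            results[url] = "resolves (now read it and confirm it supports the claim)"
        else:
            results[url] = f"broken ({status})"
    return results
```

Usage: paste a chatbot reply into a string and call `report(reply)`. Anything marked broken or unreachable is a fabricated or stale citation; anything that resolves still needs a human to open it and check that it actually says what the chatbot claimed.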

2. Educate yourself (and others) on AI bias.

AI can contribute to systemic bias in several ways – from flawed facial recognition technology to models that target Black and Latin American neighborhoods for daily police patrols.

It is important to consider the different social contexts in which AI has disproportionately impacted Black, Indigenous, and People of Color (BIPOC). Learn more about AI bias here.

With this knowledge base, users can empower themselves, becoming active learners and critical thinkers while interacting with AI tools.

Encourage your peers and teams not to take AI at face value or become passive users.

Consider discussing the following questions:

- In what ways could AI distort my decision-making by confirming my pre-existing beliefs and biases?

- What are the wider implications and risks of automating certain tasks with AI?

- How much do we know about how the model was developed and trained?

This is just a starting point. Confronting AI bias requires awareness and a critical eye.

3. Consider the possible bias in training data.

ChatGPT and other popular AI tools require training data – and lots of it. Training data that is incomplete or unrepresentative of the target population can produce skewed outputs. Generative AI tools, for example, have created stereotypical and distorted results when prompted to generate pictures of Hispanic and Latin American people, most likely due to incomplete data that fails to represent the broader Hispanic and Latin American experience.

One exercise when thinking about training data is to reflect on the following questions:

- What groups of people may be over- and under-represented in the training data?

- What experiences and beliefs may be over- and under-represented in the training data?

- How much of the information do I think is accurate and reliable?

Another way to view AI bias is through the lens of cognitive age: how a model was trained, and on what data, directly affects its accuracy and level of bias. Hunter thinks about chatbots from the standpoint of Eriksonian development, Erik Erikson's theory that lays out eight stages of psychosocial development, from infancy to old age.

“We’re starting to grade ChatGPT, and the others, on how far up the scale they’ve matured, which gives us an understanding of how good their answers are,” Hunter explains. “Obviously, if you ask…an 80-year-old and…a 10-year-old the same question, you’re going to get two completely different answers.”

Hunter continues, “We’re trying to use our cognitive backgrounds to help ascertain the true ‘cognitive age’ of this large language model we’re using that layers completely on top of ethics and bias. Maturity has a lot to do with ethics and bias. Unfortunately, the older people get, sometimes the more biased and racist they become. [Contrasting that with the earlier stages of cognitive development], nobody is born to be a racist – somebody teaches you that.”

The next time you use a chatbot or other generative AI tool, see if you can get a sense of its ‘cognitive age.’ Does this make you trust the tool more or less?

4. Understand the lack of transparency in the AI industry.

The current AI landscape is a black box. While some open-source models exist, most firms treat the development of their AI tools as proprietary knowledge, leaving the general public in the dark. This lack of transparency is concerning not only because of AI’s enormous influence, but also because of AI’s propensity to perpetuate biases and rely on exploited labor.

Stanford’s Foundation Model Transparency Index is one tool to help evaluate transparency and understand which AI developers align with your own and your organization’s principles. The study ranks the transparency of popular AI developers (e.g., OpenAI and Meta) on 100 indicators, including data, labor, and risks.

With a mean transparency score of 37%, the research found that “No major foundation model developer is close to providing adequate transparency, revealing a fundamental lack of transparency in the AI industry” (Bommasani et al., The Foundation Model Transparency Index). More specifically, information on the computational resources, data, and labor used to build AI models is a major “blind spot” among these developers.

In response, transparency is slowly becoming central to AI governance. On October 30, 2023, President Biden signed an Executive Order outlining new rules to regulate AI, such as requiring developers of the most powerful AI systems to share their safety test results with the U.S. government before releasing models to the public.

Still, it is important to be aware of and educated about the transparency issue at the heart of the AI and tech industries.

Matthew Bui, assistant professor at the University of Michigan School of Information, reminds us that knowledge is power. He explains, “Tech is expanding a lot, based on the promise it can optimize and make the world better. But many of these data- and tech-driven decisions can be hard to understand. And they don’t always deliver. It’s important the general public knows they can push back and that they do have a voice in how tech gets designed and deployed – and if it can get deployed.”

Pushing for the transparency and democratization of AI is another step towards more ethical and equitable AI usage.

5. Avoid using AI to replace nuanced, human decision-making.

AI is not a model of the complex human brain, but rather a system of predictive text. “Spicy autocorrect,” as Rumman Chowdhury, a pioneer in the field of applied algorithmic ethics, describes it.

Just because we can automate many processes and decisions does not mean that we should. Human connection, authenticity, and nuance are not obsolete – they’re actually more important now than ever. This is a central tenet of our Empathic Design approach.

It is important to decide which processes within your organization should use AI solutions. For example, rather than using AI to inform life-altering decisions (as in healthcare or law enforcement), direct it toward supporting time-consuming, repetitive tasks and relieving pain points in workflow processes.

Here are a few helpful ways to use chatbots like ChatGPT:

- Scheduling and time management

- Debugging code

- Idea generation and brainstorming

- Getting feedback on writing

- Exploratory data analysis

- Explaining complex topics

Just remember that AI is a tool, and we need to treat it as such.

Conclusion

No matter how accurate AI outputs sound, they can’t be taken at face value.

“The first answer you’re going to get is not going to be the final answer you want – it’s a conversation. Have a continuous conversation with these large language models to help you get to a more human-like, accurate response,” Hunter advises.

In other words, interacting with AI cannot be a one-and-done exercise. Responsible use of AI requires fact-checking and a critical eye to get accurate and unbiased responses. It requires awareness of potential sources of AI bias and of the widespread lack of transparency in the industry. And it requires an understanding that AI should support – not replace – human decision-making.

After all, if industry leaders are not making the right decisions to confront AI bias, then users need to.

Stay tuned to this blog to learn more about how AI can be designed in more responsible and ethical ways.